Front End Data Caching with the AWS Amplify Cache Utility

I’ve worked in a lot of frontend code bases over the years, and I’ve found that in many cases the “state management” technique of choice turns out to be primarily an API cache, which is a different thing from UI state. When state is held in context (directly, via Redux, whatever) for use across components, it is frequently just an in-memory copy of data that has been fetched from a backend API.

Instead of creating global “state” to hold API responses, you can use a tool like the AWS Amplify Cache Utility. This isn’t the only option, but we’ll look at this one in this article. You can find the complete API docs here.

Caching Overview

The idea of caching is to temporarily store a copy of API responses in order to reduce the need for subsequent calls to those same endpoints. Caching should be treated as an enhancement that lives in the service layer of your app. For example, when I load a route in an app, the service functions responsible for loading the data for that route should be called. Those service functions will be responsible for making HTTP calls to get data. Those service functions can be enhanced to cache responses and return the cached response on subsequent calls. Whether that response comes from the cache or a network call should be invisible to the consuming code.

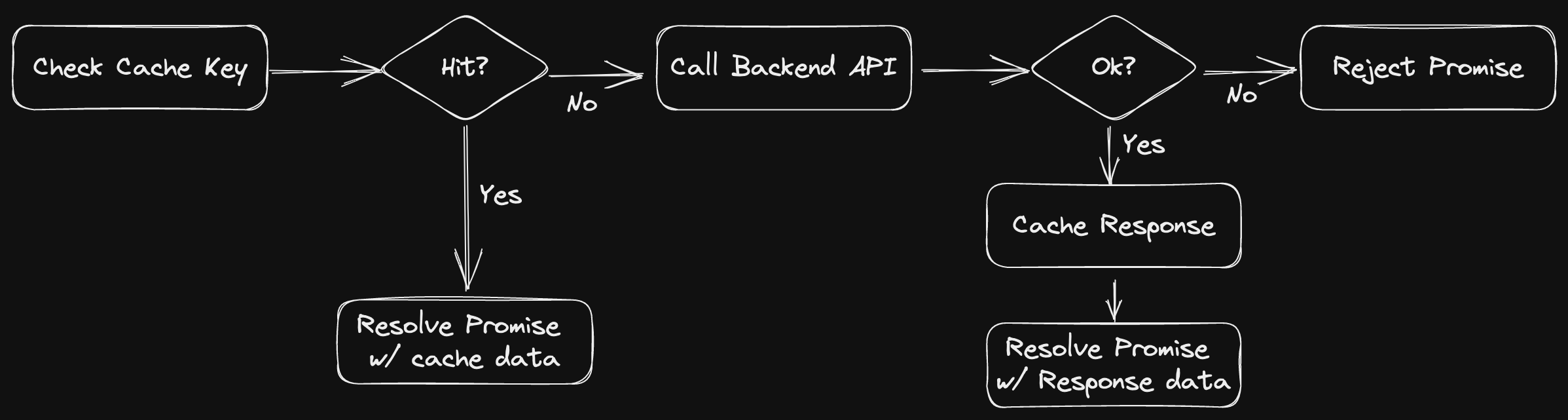

The basic flow goes like this:

- Your app calls a service function

- The service function checks for cached data using the cache key

- If the requested data already exists in the cache, return it without calling the API

- If the cache comes back empty for that key, make the API call, store the response in the cache for next time, and return the response
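The flow above can be sketched as a small helper. This is a minimal sketch rather than anything Amplify ships: `memoryCache` is a hypothetical Map-backed stand-in that only borrows the `getItem`/`setItem` method names so the example runs on its own, and `cachedFetch` and `fetchReminders` are names invented for illustration.

```javascript
// Hypothetical stand-in for the cache so this sketch runs without dependencies.
const memoryCache = {
  store: new Map(),
  getItem(key) {
    // Return null when the key is missing
    return this.store.has(key) ? this.store.get(key) : null
  },
  setItem(key, value) {
    this.store.set(key, value)
  },
}

// Check the cache first and fall back to the fetcher on a miss.
async function cachedFetch(cache, key, fetcher) {
  const cached = cache.getItem(key)
  if (cached !== null && cached !== undefined) {
    return cached // cache hit: no network call
  }
  const fresh = await fetcher() // cache miss: call the API
  cache.setItem(key, fresh) // store the response for next time
  return fresh
}

// Hypothetical API call that counts how often it actually runs.
let apiCalls = 0
async function fetchReminders() {
  apiCalls += 1
  return [{ id: 1, text: 'Water the plants' }]
}

async function demo() {
  const first = await cachedFetch(memoryCache, '/reminders', fetchReminders)
  const second = await cachedFetch(memoryCache, '/reminders', fetchReminders)
  return { first, second, apiCalls }
}
```

Calling `demo()` requests the same key twice, but the fake API only runs once. The consuming code can't tell which of the two responses came from the cache, which is the point.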

Caching can enhance the user experience and cut down on network traffic. It is a great way to reduce lag in apps where API responses might be slow and the data doesn’t change frequently. For data that changes frequently, your app should use fresh data; caching can hurt the experience by showing stale data. The point here is that you shouldn’t cache API data without first understanding how often it is updated and what users expect from the experience.

Add Caching to an App

Install AWS Amplify

    npm install aws-amplify

Import the cache util

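The Cache utility should be imported into the service files where you will access the Cache. The examples in this article call the Cache methods synchronously, which matches aws-amplify releases before v6. If you are on v6 or later, check the docs: there the Cache methods are asynchronous and need an await.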

    import { Cache } from 'aws-amplify'

Simple Caching

At its most basic, the Cache utility allows you to set and get key/value pairs. If the specified key isn’t in the cache, getItem returns null.

A standard use case that we’ll employ here is to check for the presence of cached data. If it exists, we return it and skip the API call. If it doesn’t, the API call is made, and the data is stored in the Cache and returned. On subsequent calls to this service, we will get cache hits and skip the API call. When an item expires or is discarded from the cache, we’ll make the API call again and put the updated value in the cache. The cache utility handles those details, so we don’t have to add logic beyond checking for the item’s presence.

    // HTTP client used in these examples
    import axios from 'axios'

    export async function getReminders() {
      const reminders = Cache.getItem('/reminders')
      if (reminders) {
        // Cache hit - return the data from the cache
        return reminders
      } else {
        // Cache miss - call the API
        const { data } = await axios.get('/reminders')
        // store the data in the cache for next request
        Cache.setItem('/reminders', data.reminders)
        // return the data
        return data.reminders
      }
    }

Caching with priority

When cache memory is low, the cache module will discard the least recently used items first by default. We can override this behavior and force it to discard other items first by setting a priority. Priority values range from 1 to 5, with 1 being the highest priority and 5 the lowest; lower-priority items are discarded first. A default priority can be set on the cache instance, but out of the box every cache item gets a priority of 5.
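To make that ordering concrete, here is a conceptual sketch of how a set of entries could be ranked for eviction: lowest priority (largest number) first, then least recently used within the same priority. It illustrates the rule described above and is not Amplify’s actual implementation; `evictionOrder` and the `lastUsed` field are hypothetical names.

```javascript
// Conceptual sketch only: ranks entries in the order they would be discarded.
// Priority 5 (lowest) goes first; ties are broken by least recently used.
function evictionOrder(entries) {
  return [...entries]
    .sort((a, b) => b.priority - a.priority || a.lastUsed - b.lastUsed)
    .map((entry) => entry.key)
}

const entries = [
  { key: '/reminders', priority: 1, lastUsed: 100 },
  { key: '/settings', priority: 5, lastUsed: 300 },
  { key: '/tips', priority: 5, lastUsed: 200 },
]

const order = evictionOrder(entries)
// order is ['/tips', '/settings', '/reminders']
```

Even though `/reminders` is the least recently used entry here, its priority of 1 keeps it in the cache the longest.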

    export async function getReminders() {
      const reminders = Cache.getItem('/reminders')
      if (reminders) {
        // Cache hit - return the data from the cache
        return reminders
      } else {
        // Cache miss - call the API
        const { data } = await axios.get('/reminders')
        // store the data with a specific priority
        Cache.setItem('/reminders', data.reminders, { priority: 1 })
        // return the data
        return data.reminders
      }
    }

Caching with expiration

You can define an expiration on a specific item by passing an absolute time, expressed in milliseconds since the epoch, rather than a duration. This is useful when you know the data should be refreshed after a set amount of time.
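Since the value is a point in time and not a duration, a small helper can keep the arithmetic readable. The sketch below is plain JavaScript with no Amplify dependency; `minutesFromNow` and `isExpired` are hypothetical helper names, and `isExpired` only illustrates the kind of comparison a cache would make.

```javascript
const MS_PER_MINUTE = 60 * 1000

// Convert a duration in minutes into an absolute timestamp (ms since epoch).
// `now` can be passed in so the function is easy to test.
function minutesFromNow(minutes, now = Date.now()) {
  return now + minutes * MS_PER_MINUTE
}

// An entry is stale once the clock reaches its expiration timestamp.
function isExpired(expires, now = Date.now()) {
  return now >= expires
}

const fetchedAt = 1700000000000 // fixed example timestamp
const expires = minutesFromNow(10, fetchedAt) // 1700000600000

const afterFiveMinutes = isExpired(expires, fetchedAt + 5 * MS_PER_MINUTE) // false, still fresh
const afterElevenMinutes = isExpired(expires, fetchedAt + 11 * MS_PER_MINUTE) // true, time to refetch
```

With a helper like this, the options object in the service function that follows could read `{ expires: minutesFromNow(10) }`.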

    export async function getReminders() {
      const reminders = Cache.getItem('/reminders')
      if (reminders) {
        // Cache hit - return the data from the cache
        return reminders
      } else {
        // Cache miss - call the API
        const { data } = await axios.get('/reminders')
        // Define expiration - date time in milliseconds
        const expirationTime = Date.now() + 600000 // 10 minutes from now
        // store the data with a specific expiration
        Cache.setItem('/reminders', data.reminders, { expires: expirationTime })
        // return the data
        return data.reminders
      }
    }

Handling Data Updates

When we add, update, or remove data via an API call, we need to make sure a later service call that retrieves the list isn’t missing our updates. To avoid a stale cache, we can take a couple of approaches: update the cache to reflect our changes, or remove the dataset from the cache to ensure a refetch occurs.

Updating the cache

Updating the cache can make the app more efficient, but should be handled with care to avoid situations where the front end data does not reflect the back end source of truth for the data.

In this example, we POST to create a new record and then add that new item to the cache entry used for fetching the entire dataset. It’s critical that when this update is applied, the resulting dataset stored in the cache matches exactly what would be returned from the server. Here, the POST returns the new object from the server, and we append it to the cached list so that the cache returns the same list a fresh GET would.

    export async function createReminder(reminder) {
      // POST to add a record
      const { data } = await axios.post('/api/reminders', { text: reminder })
      // Look up the cached list under the same key getReminders uses
      const cachedReminders = Cache.getItem('/reminders')
      // Only update when the full list is already cached. On a cache miss we
      // leave the cache empty so the next GET fetches the complete list
      // instead of finding a list that holds nothing but the new item.
      if (cachedReminders) {
        Cache.setItem('/reminders', cachedReminders.concat(data.reminder))
      }
      // Return the response to handle app state for the POST
      return data.reminder
    }

Removing Items from the cache

Removing potentially stale items from the cache is the safest way to ensure accurate data, but can lead to performance issues if the back end response is known to be slow to return.

    export async function createReminder(reminder) {
      // POST to add a record
      const { data } = await axios.post('/reminders', { text: reminder })
      // Remove the list (same key getReminders uses) to ensure a fresh fetch on the next GET to /reminders
      Cache.removeItem('/reminders')
      // Return the response to handle app state for the POST
      return data.reminder
    }

Front Loading The Cache

There may be cases where cache entries for specific API responses can be constructed programmatically from existing data, before the API call is ever made in the app code. This should be a rare exception and should be handled with an abundance of care.

Take the example of a details API that gets a single item based on an ID.

We use the same general approach here: check the cache and, if the item isn’t there, fetch the data from the API.

    export async function getReminder(id) {
      const cached = Cache.getItem(`/reminder/${id}`)
      if (cached) {
        return cached
      } else {
        const { data } = await axios.get(`/api/reminder/${id}`)
        const { reminder } = data
        Cache.setItem(`/reminder/${id}`, reminder)
        return reminder
      }
    }

Now, let’s say this API is slow and calling it creates a lot of lag in the UI. Let’s also say that our full dataset contains all the information we would get from this details call. We can preemptively build out the cache entries for the individual items when we get the list.

One approach would be to create an entry for each item in the cache at the point in time we get the list and cache it.

    export async function getReminders() {
      const cached = Cache.getItem('/reminders')
      if (cached) {
        return cached
      } else {
        const { data } = await axios.get('/api/reminders')
        const { reminders } = data
        Cache.setItem('/reminders', reminders)
        // Create cache entries for each of the detail calls
        reminders.forEach((reminder) => {
          Cache.setItem(`/reminder/${reminder.id}`, reminder)
        })
        return reminders
      }
    }

This is just one example of a way to accomplish the stated goal. The specific approach here could vary based on many factors such as the frequency of data access, the size of each record, the desired retention for the data, etc.
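As a quick way to convince yourself the front-loaded entries are actually used, the following self-contained sketch swaps the real cache and HTTP client for hypothetical in-memory stand-ins (`fakeCache`, `fakeApi`) and counts the API calls. Only the method names `getItem` and `setItem` mirror the code above; everything else is an assumption made for illustration.

```javascript
// Hypothetical stand-ins so the sketch runs without aws-amplify or axios.
const fakeCache = {
  store: new Map(),
  getItem(key) {
    return this.store.has(key) ? this.store.get(key) : null
  },
  setItem(key, value) {
    this.store.set(key, value)
  },
}

const calls = { list: 0, detail: 0 }
const fakeApi = {
  async getList() {
    calls.list += 1
    return [{ id: 1, text: 'Pay rent' }, { id: 2, text: 'Call mom' }]
  },
  async getDetail(id) {
    calls.detail += 1
    return { id, text: 'from the slow details endpoint' }
  },
}

async function getReminders() {
  const cached = fakeCache.getItem('/reminders')
  if (cached) return cached
  const reminders = await fakeApi.getList()
  fakeCache.setItem('/reminders', reminders)
  // Front load one entry per item, keyed the same way as the details call
  reminders.forEach((r) => fakeCache.setItem(`/reminder/${r.id}`, r))
  return reminders
}

async function getReminder(id) {
  const cached = fakeCache.getItem(`/reminder/${id}`)
  if (cached) return cached
  const reminder = await fakeApi.getDetail(id)
  fakeCache.setItem(`/reminder/${id}`, reminder)
  return reminder
}

async function demo() {
  await getReminders()
  const reminder = await getReminder(2) // served from the front-loaded entry
  return { reminder, calls }
}
```

After `demo()` runs, `calls.detail` is still 0: the details lookup was answered entirely from the entry created while loading the list. The trade-off is that the detail entries are only as fresh as the list they were built from.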

Conclusion

You might be wondering why I chose this particular approach over service workers and the browser Cache API, or why I didn’t choose a different library. Service workers are super powerful, but also potentially dangerous. Once a service worker has been installed, if things weren’t done right, many users can be stuck with an outdated version of it for a while. I’m not saying not to use them, but you need to exercise caution. As for other libraries, you might find some approach you like better and that’s cool. The general approach will likely be the same; it’ll be the API and available options that change. You do you 😎.